OFFER Pass4sure and Lead2pass 70-466 PDF & VCE (91-100)

QUESTION 91

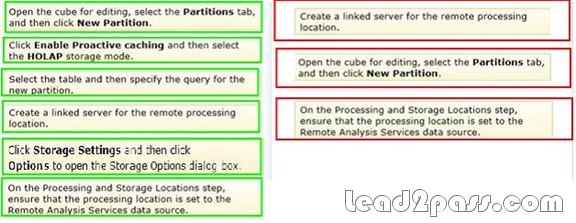

Drag and Drop Questions

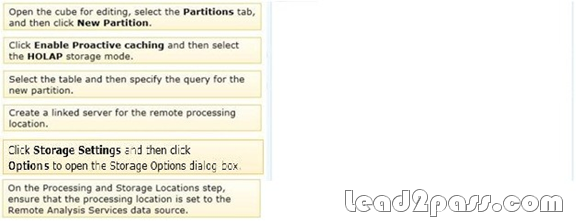

You are developing a SQL Server Analysis Services (SSAS) multidimensional project that is configured to source data from a SQL Azure database. You plan to use multiple servers to process different partitions simultaneously. You create and configure a new data source. You need to create a new partition and configure SQL Server Analysis Services (SSAS) to use a remote server to process data contained within the partition. Which three actions should you perform in sequence? (To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.)

Explanation:

* You create a remote partition using the Partition Wizard. On the Specify Processing and Storage Options page, for the Remote Analysis Services data source, specify the dedicated database on the remote instance of Analysis Services. This instance of Analysis Services is called the remote server of the remote partition. For Storage location, you can specify the default data location for the remote server or a specified folder on the server.

You must create an Analysis Services database on the remote server and provide appropriate security settings. An additional OLAP data source is created on the remote database pointing to the server on which the partition is defined. The MasterDatasourceID property setting on the remote database points to the data source which, in turn, points to the master server. This property is only set on a database that contains remote partitions. The RemoteDatasourceID property setting on the remote partition specifies the ID of the OLAP data source on the master server that points to the remote server. A remote database can only host remote partitions for a single server.

* Before you create a remote partition, the following conditions must be met:

The domain user account for the local instance of Analysis Services must have administrative access to the remote database.

Reference: Creating and Managing a Remote Partition

QUESTION 92

You have a database for a mission-critical web application. The database is stored on a SQL Server 2012 instance and is the only database on the instance. The application generates all T-SQL statements dynamically and does not use stored procedures. You need to maximize the amount of memory available for data caching. Which advanced server option should you modify?

A. Scan for Startup Procs

B. Allow Triggers to Fire Others

C. Enable Contained Databases

D. Optimize for Ad hoc Workloads

Answer: D

QUESTION 93

You have a database named database1. Database developers report that there are many deadlocks. You need to implement a solution to monitor the deadlocks. The solution must meet the following requirements:

– Support real-time monitoring.

– Be enabled and disabled easily.

– Support querying of the monitored data.

What should you implement? More than one answer choice may achieve the goal. Select the BEST answer.

A. an Extended Events session

B. a SQL Server Profiler template

C. log errors by using trace flag 1204

D. log errors by using trace flag 1222

Answer: A

QUESTION 94

You plan to design an application that temporarily stores data in a SQL Azure database. You need to identify which types of database objects can be used to store data for the application. The solution must ensure that the application can make changes to the schema of a temporary object during a session. Which type of objects should you identify?

A. common table expressions (CTEs)

B. table variables

C. temporary tables

D. temporary stored procedures

Answer: C
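The distinction behind this answer is that a temporary table is a real table whose schema can be altered after it is created, whereas a T-SQL table variable's structure is fixed at declaration. The following is only a minimal sketch of that idea: it uses Python's built-in `sqlite3` module as a stand-in engine (an assumption made so the example is runnable without a SQL Azure connection), and the table name `staging` and its columns are hypothetical. In T-SQL the equivalent object would be a `#`-prefixed temporary table modified with `ALTER TABLE`.

```python
import sqlite3

# SQLite is used here only as a runnable stand-in; it is NOT SQL Azure.
conn = sqlite3.connect(":memory:")

# Create a session-scoped temporary table (hypothetical name and column).
conn.execute("CREATE TEMP TABLE staging (id INTEGER)")

# Change the schema of the temporary object later in the same session.
conn.execute("ALTER TABLE staging ADD COLUMN note TEXT")

# Read back the column names to confirm the schema change took effect.
columns = [row[1] for row in conn.execute("PRAGMA table_info(staging)")]
print(columns)

conn.close()
```

The temporary table disappears when the connection closes, which matches the "temporarily stores data" requirement in the question.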

QUESTION 95

You have a SQL Server 2012 instance that hosts a single-user database. The database does not contain user-created stored procedures or user-created functions. You need to minimize the amount of memory used for query plan caching. Which advanced server option should you modify?

A. Enable Contained Databases

B. Allow Triggers to Fire Others

C. Optimize for Ad hoc Workloads

D. Scan for Startup Procs

Answer: C

QUESTION 96

You are developing a SQL Server Analysis Services (SSAS) multidimensional database.

The underlying data source does not have a time dimension table. You need to implement a time dimension.

What should you do?

A. Add an existing SSAS database time dimension as a cube dimension.

B. Use the SQL Server Data Tools Dimension Wizard and generate a time table on the server.

C. Use the SQL Server Data Tools Dimension Wizard and generate a time table in the data source.

D. Use the SQL Server Data Tools Dimension Wizard and generate a time dimension by using the Use an existing table option.

E. Create a CSV file with time data and use the DMX IMPORT statement to import data from the CSV file.

F. Create a time dimension by using the Define time intelligence option in the Business Intelligence Wizard.

G. Create a time dimension by using the Define dimension intelligence option in the Business Intelligence Wizard.

H. Create a script by using a sample time dimension from a different multidimensional database. Then create a new dimension in an existing multidimensional database by executing the script.

Answer: B

QUESTION 97

You are working with multiple tabular models deployed on a single SQL Server Analysis Services (SSAS) instance.

You need to ascertain the memory consumed by each object in the SSAS instance.

What should you do?

A. Use the $System.discover_object_memory_usage dynamic management view.

B. Use the Usage Based Optimization wizard to design appropriate aggregations.

C. Use SQL Server Profiler to review session events for active sessions.

D. Use the Performance Counter group named Processing.

Answer: A

QUESTION 98

Hotspot Question

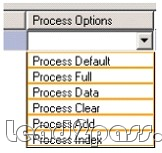

You maintain a multidimensional Business Intelligence Semantic Model (BISM) that was developed with default settings.

The model has one cube and the cube has one measure group. The measure group is based on a very large fact table and is partitioned by month. The fact table is incrementally loaded each day with approximately 800,000 new rows.

You need to ensure that all rows are available in the cube while minimizing the processing time.

Which processing option should you use? (To answer, select the appropriate option in the answer area.)

QUESTION 99

You are planning to develop a SQL Server Analysis Services (SSAS) tabular project. The project will be deployed to a SSAS server that has 16 GB of RAM.

The project will source data from a SQL Server 2012 database that contains a fact table named Sales. The fact table has more than 60 billion rows of data.

You need to select an appropriate design to maximize query performance.

Which data access strategy should you use? (More than one answer choice may achieve the goal. Select the BEST answer.)

A. Configure the database to use DirectQuery mode. Create a columnstore index on all the columns of the fact table.

B. Configure the database to use In-Memory mode. Create a clustered index which includes all of the foreign key columns of the fact table.

C. Configure the database to use In-Memory mode. Create a columnstore index on all the columns of the fact table.

D. Configure the database to use DirectQuery mode. Create a clustered index which includes all of the foreign key columns of the fact table.

Answer: A
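A rough calculation shows why In-Memory mode is implausible here. The sketch below uses only the two figures given in the question (60 billion rows, 16 GB of RAM); the 1-byte-per-row compressed size is a deliberately optimistic, hypothetical assumption and not a measured compression ratio.

```python
# Figures taken from the question.
FACT_ROWS = 60_000_000_000          # "more than 60 billion rows"
SERVER_RAM_BYTES = 16 * 1024 ** 3   # 16 GB of RAM on the SSAS server


def bytes_per_row_budget(rows: int, ram_bytes: int) -> float:
    """Average per-row size that would exactly fill the available RAM."""
    return ram_bytes / rows


def fits_in_memory(rows: int, ram_bytes: int, assumed_bytes_per_row: float) -> bool:
    """True if the whole table fits in RAM at the assumed per-row size."""
    return rows * assumed_bytes_per_row <= ram_bytes


budget = bytes_per_row_budget(FACT_ROWS, SERVER_RAM_BYTES)
print(f"{budget:.3f} bytes per row")  # prints "0.286 bytes per row"

# Hypothetical, optimistic assumption: 1 byte per row after compression.
print(fits_in_memory(FACT_ROWS, SERVER_RAM_BYTES, 1.0))  # prints "False"
```

Even ignoring memory needed for queries and the operating system, the table would have to average under a third of a byte per row to fit, which is why the options that leave the data in the relational database are the realistic candidates.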

Case Study 1: Scenario 1 (Question 100 – Question 109)

General Background

You are the data architect for a company that uses SQL Server 2012 Enterprise Edition.

You design data modeling and reporting solutions that are based on a sales data warehouse.

Background

The solutions will be deployed on the following servers:

– ServerA runs SQL Server Database Engine. ServerA is the data warehouse server.

– ServerB runs SQL Server Database Engine, SQL Server Analysis Services (SSAS) in multidimensional mode, and SQL Server Integration Services (SSIS).

– ServerC runs SSAS in tabular mode, SQL Server Reporting Services (SSRS) running in SharePoint mode, and Microsoft SharePoint 2010 Enterprise Edition with SP1.

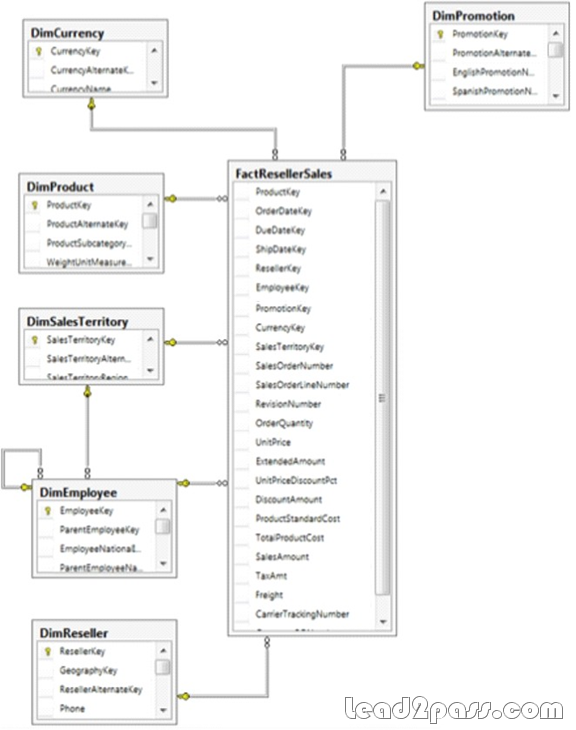

The data warehouse schema currently contains the tables shown in the exhibit. (Click the Exhibit button.)

Business Requirements

The reporting solution must address the requirements of the sales team, as follows:

– Team members must be able to view standard reports from SharePoint.

– Team members must be able to perform ad-hoc analysis by using Microsoft Power View and Excel.

– Team members can have standard reports delivered to them on a schedule of their choosing.

The standard reports:

– Will use a sales territory hierarchy for organizing data by region.

– Will be accessible from SharePoint.

The Excel ad-hoc reports:

– Will use the same data store as the standard reports.

– Will provide direct access to the data store for the sales team and a simplified view for the executive team.

Technical Requirements

The standard reports must be based on an SSAS cube. The schema of the data warehouse on ServerA must be able to support the ability to slice the fact data by the following dates:

– Order date (OrderDateKey)

– Due date (DueDateKey)

– Ship date (ShipDateKey)

Additions and modifications to the data warehouse schema must adhere to star schema design principles to minimize maintenance and complexity.

The multidimensional and tabular models will be based on the data warehouse. The tabular and multidimensional models will be created by using SQL Server Data Tools (SSDT). The tabular project is named AdhocReports and the multidimensional project is named StandardReports.

The cube design in the StandardReports project must define two measures for the unique count of sales territories (SalesTerritoryKey) and products (ProductKey).

A deployment script that can be executed from a command-line utility must be created to deploy the StandardReports project to ServerB.

The tabular model in the AdhocReports project must meet the following requirements:

– A hierarchy must be created that consists of the SalesTerritoryCountry and SalesTerritoryRegion columns from the DimSalesTerritory table and the EmployeeName column from the DimEmployee table.

– A key performance indicator (KPI) must be created that compares the total quantity sold (OrderQuantity) to a threshold value of 1,000.

– A measure must be created to calculate day-over-day (DOD) sales by region based on order date.

SSRS on ServerC must be configured to meet the following requirements:

– It must use a single data source for the standard reports.

– It must allow users to create their own standard report subscriptions.

– The sales team members must be limited to only viewing and subscribing to reports in the Sales Reports library.

A week after the reporting solution was deployed to production, Marc, a salesperson, indicated that he has never received reports for which he created an SSRS subscription. In addition, Marc reports that he receives timeout errors when running some reports on demand.


QUESTION 100

You need to deploy the StandardReports project.

What should you do? (Each correct answer presents a complete solution. Choose all that apply.)

A. Deploy the project from SQL Server Data Tools (SSDT).

B. Use the Analysis Services Deployment utility to create an XMLA deployment script.

C. Use the Analysis Services Deployment wizard to create an MDX deployment script.

D. Use the Analysis Services Deployment wizard to create an XMLA deployment script.

Answer: AD

Explanation:

There are several methods you can use to deploy a tabular model project. Most of the deployment methods that can be used for other Analysis Services projects, such as multidimensional, can also be used to deploy tabular model projects.

A: Deploy command in SQL Server Data Tools

The Deploy command provides a simple and intuitive method to deploy a tabular model project from the SQL Server Data Tools authoring environment.

Caution:

This method should not be used to deploy to production servers. Using this method can overwrite certain properties in an existing model.

D: The Analysis Services Deployment Wizard uses the XML output files generated from a Microsoft SQL Server Analysis Services project as input files. These input files are easily modifiable to customize the deployment of an Analysis Services project. The generated deployment script can then either be immediately run or saved for later deployment.

Incorrect:

not B: The Microsoft.AnalysisServices.Deployment utility lets you start the Microsoft SQL Server Analysis Services deployment engine from the command prompt. As input files, the utility uses the XML output files generated by building an Analysis Services project in SQL Server Data Tools (SSDT).
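For reference, the command-prompt entry point mentioned above accepts an `/o` switch (write a deployment script to a file instead of deploying) and a `/d` switch (do not connect to the target instance while generating it). The sketch below only assembles such a command line in Python and does not run it. The file names `StandardReports.asdatabase` and `StandardReports.xmla` and the `bin` folder are hypothetical placeholders, and the executable's install location is not assumed.

```python
from typing import List

# Executable name as given in the explanation; its install path is not assumed.
DEPLOYMENT_EXE = "Microsoft.AnalysisServices.Deployment.exe"


def build_script_command(asdatabase_path: str,
                         output_script: str,
                         disconnected: bool = True) -> List[str]:
    """Build the argument list for generating a deployment script.

    /o:<file> runs the tool in output mode and writes the script to <file>.
    /d        keeps the tool from connecting to the target server.
    """
    args = [DEPLOYMENT_EXE, asdatabase_path, f"/o:{output_script}"]
    if disconnected:
        args.append("/d")
    return args


# Hypothetical input and output file names for the StandardReports project.
cmd = build_script_command(r"bin\StandardReports.asdatabase",
                           "StandardReports.xmla")
print(" ".join(cmd))
```

On a machine where the tool is installed, the list could be passed to `subprocess.run`, and the resulting script file could then be executed against ServerB.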
